Since the public release of ChatGPT in 2022, the number of humanitarian actors experimenting with AI and trialling AI-powered pilot projects has grown rapidly. These include pilot projects involving generative AI models as well as other AI systems.

However, despite the uptick in AI interest and adoption, few agencies have shared specific details related to their work, such as who has built and provided the AI models, the locations into which the models are being deployed, and the specific aims of the project. Equally or perhaps more importantly, few if any of the humanitarian actors experimenting with AI have shared key insights derived from the success, limitations, and failures of their AI-related work.

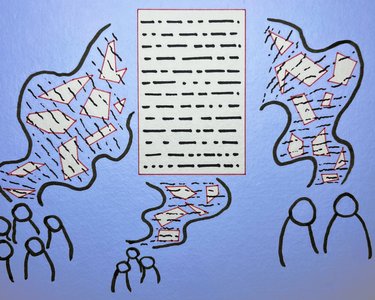

This hinders lesson learning and coordination between humanitarian actors. It also makes it harder for aid actors to broker equitable, effective, and values-driven relationships with AI and tech suppliers, and to procure safe and technologically assured AI models. The lack of transparency also undermines aid actors' commitments to engage communities in the design and delivery of humanitarian interventions. In fact, it remains unknown whether, and to what extent, crisis-affected populations are aware that AI is used in services and decisions that directly affect their well-being.

So, how can the humanitarian sector share information about, and improve transparency around, its use of AI?

To address the dearth of information on AI-enabled projects in humanitarian contexts, we partnered with glass.ai to map both live and closed projects that use AI and/or machine learning to improve humanitarian action. The results of this mapping can be found here.

While a number of interesting themes emerged from this mapping, a few trends bear further exploration:

- A significant number of AI-related projects focused on mapping the impact of natural disasters and crises, particularly on infrastructure. Increasingly granular maps that detail damage to roads and buildings can help in planning and prioritising humanitarian responses, particularly in the immediate aftermath of a crisis or emergency. Such projects have become more common as commercially available satellite imagery and AI models have become more plentiful. However, it remains to be seen whether, and to what extent, these maps are made publicly available in real time so that frontline responders (both large humanitarian agencies and grassroots organisations) and crisis-affected populations can use this information to improve well-being. Moreover, with access to increasingly accurate and rapidly produced damage assessments, there is a risk that aid actors will forgo, or rely less on, tried-and-tested methods of community participation, and their commitments to it, when identifying needs and designing humanitarian interventions.

- A number of projects focus on predicting patterns of conflict, such as the frequency, location, or severity of conflict-related incidents, as well as population movements. Many of these projects were designed and developed in the late 2010s and, according to publicly available information, only a few appear to still be in operation. This might indicate that these use cases were less effective than others. Equally, and perhaps more likely, it could reflect a shift away from the more traditional machine learning models on which many of these projects were based towards Large Language Models (LLMs), such as ChatGPT, particularly as the commercial availability of these models increased in 2022.

- Finally, and relatedly, only a handful of examples mention the use of LLMs, including chatbots. This does not square with the high number of pilot projects of which the UKHIH is aware, which suggests that few agencies are sharing information about whether and how they are experimenting with LLMs. The UKHIH is aware of multiple pilot projects involving LLMs in humanitarian contexts, from mental health chatbots to automated information provision. The lack of public information about these pilots might be driven by growing competition between aid actors for increasingly limited funding.

This mapping exercise is one of many actions needed to improve communication and transparency in the use of AI in humanitarian contexts, most importantly for crisis-affected communities.

We encourage humanitarian organisations, tech providers, and other stakeholders to contribute to this resource by sharing their insights, challenges, and successes. Openly exchanging knowledge about safe and effective AI tools not only strengthens individual efforts but also accelerates innovation and builds trust across the sector.

Together, we can build a future where AI is leveraged responsibly and effectively to improve humanitarian action.

Explore the Directory of AI-enabled humanitarian projects
