A newsletter on Responsible AI and Emerging Tech for Humanitarians

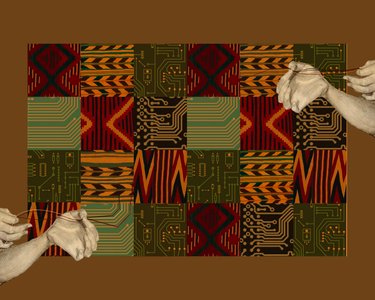

The Grand Bargain reminds us of the importance of localising aid, empowering affected communities, and amplifying their voices in decision-making. But as AI increasingly integrates into humanitarian efforts, we must ask: who benefits, and who is left behind?

Currently, AI often follows a top-down, extractive approach, where data from vulnerable populations fuels solutions designed far from those directly affected. This approach risks deepening inequities, eroding trust, and missing invaluable community insights.

Community engagement and public participation are crucial, but they must be conducted thoughtfully and avoid “participation washing” to ensure authenticity. Participatory methods can also shape outcomes, meaning we must be vigilant about how engagement is designed and how information is shared.

This month, we explore how humanitarian AI programmes can prioritise the needs of affected communities. How do we engage them meaningfully without adding to their burdens? And how can their voices shape AI decisions to better reflect local needs?

Ultimately, communities themselves should have a say on the extent to which machines are involved in high-stakes decisions affecting their well-being—such as refugee status determination, access to services, and more. Let's dive in!

Affected Populations in Discussion (Podcast)

A conversation hosted by Brent Phillips, with Olubayo (Bayo) Adekanmbi (Data Science Nigeria), Shaza Alrihawi (Lifbi), Shruti Viswanathan and Helen McElhinney (CDAC Network).

This month’s podcast dives into the challenges and opportunities of community-centred AI in humanitarian contexts. From addressing cultural barriers to overcoming AI’s inherent opacity, the panel shares practical strategies and real-world lessons for implementing it.

🎧 Listen now on SoundCloud or tune into the series via iTunes or Spotify.

Case study: Signpost AI - Supporting displaced communities

With over 1.2 billion people at risk of displacement by 2050, access to reliable information is critical. Where do I go? Where can I get food, shelter, or medical services? How do I apply for asylum?

To address this challenge, a consortium including IFRC, Mercy Corps and local partners developed SignpostChat, a generative AI-powered chatbot tailored to support displaced people with reliable information. Signpost collaborates directly with impacted communities to ensure it focuses on what is relevant to them.

Tested in Greece, Italy, Kenya, and El Salvador, the chatbot delivers hyperlocal, context-aware information and refers complex queries to human agents. Built with a community-led approach, it emphasises:

- Ethical AI usage

- Local language and context adaptation

- A human-in-the-loop model to ensure cultural sensitivity

📖 Read more about Signpost AI

Spotlight: AI and the Global South

CARE International, in collaboration with Accenture, has published critical research on the role of Global South Civil Society Organisations (CSOs) in AI.

This research amplifies Global South voices and explores pathways for more inclusive and participatory artificial intelligence throughout its lifecycle and across AI governance. The authors interviewed 17 CSOs in 12 ‘Global South’ countries in Africa, Asia, Latin America, and the Middle East, who shared key aspirations, concerns, and expectations regarding AI’s influence on their communities. Their views are supplemented by those of multinational technology companies, iNGOs, and UN agencies to better understand ways to increase equitable decision-making in relation to AI.

The research examines three key tensions in the humanitarian and development context:

- AI in operations: Navigating the balance between efficiency and effectiveness.

- AI and the digital divide: Understanding AI as both a risk and potential equaliser.

- Participation across the AI lifecycle: Moving from sporadic involvement to consistent meaningful inclusion.

It points to four main ways to better include civil society organisations (CSOs) in AI work. These are:

- helping more people understand AI and sharing knowledge between sectors,

- giving local communities a bigger voice in decisions about AI,

- supporting efforts to highlight how AI affects different groups and what outcomes are needed,

- and improving digital systems while making sure data is managed fairly.

📖 Read the full report

Editor's Choice

Curated articles, tools, and events on AI and humanitarian innovation.

- Participatory AI for humanitarian innovation (Nesta, 2021): A practical framework for implementing participatory AI, outlining varying levels of engagement, from consultation and contribution to collaboration and co-creation.

- The double edged sword of AI - Panel Discussion (World Summit AI, 2024): A discussion between Katya Klinova (United Nations), Paul Uithol (HOT), Helen McElhinney (CDAC Network), Paola Yela (IFRC) and Sarah Spencer (EthicAI).

- The risks and opportunities of AI on humanitarian action (Wilton Park, 2024): Advocates for ethical, locally-driven AI use to reduce bias, avoid cultural insensitivity, and set clear guidelines.

- The AI Decolonial Manyfesto: A call for a decolonial approach and community-driven, culturally diverse governance in AI to address historical injustices.

- The AI Index Report (Stanford University, 2024): Key trends in AI, such as technical advancements, public perceptions of the technology, and the geopolitical dynamics surrounding its development.

AI Safety Label

Nesta, in partnership with UKHIH, has been exploring the development of an AI Safety Label by engaging crisis-affected communities, humanitarians, and other stakeholders in earthquake-affected areas of Turkey. Unlike existing efforts, this initiative integrates community assessments into the assurance process, addressing a critical gap in the humanitarian sector, where community accountability has often been overlooked. By collaborating with local responders and humanitarian agencies, this work aims to build trust in AI-driven tools, foster consensus around an AI Safety Label, and guide ethical risk management. Find out more.

Upcoming Opportunities

Mercy Corps Ventures has launched the fourth round of its Crypto for Good Fund, offering equity-free grants of up to $100,000 to start-ups leveraging blockchain and Web3 technologies to enhance various areas, including humanitarian aid delivery in the Global South. Deadline: 20th December 2024.

FCDO invites proposals by 13 January 2025 to conduct research on how humanitarian actors can address the risks associated with AI systems so they can be used responsibly. Value up to £100,000.

The Robotics for Good Youth Challenge is a United Nations-based educational robotics competition that invites global youth teams to design, build, and program robots addressing issues in disaster response. Participants can join through in-person national events or by submitting video entries for evaluation by 1st April 2025.

As 2024 comes to an end, take a moment to revisit previous episodes of the Humanitarian AI Today podcast.

Disclaimer: The views expressed in the articles featured in this newsletter are solely those of the individual authors and do not reflect the official stance of the editorial team, any affiliated organisations or donors.